To process thousands of items in Power Automate without hitting performance issues or throttling, you should control the flow's concurrency settings.

1. How can I limit how many items are processed at the same time?

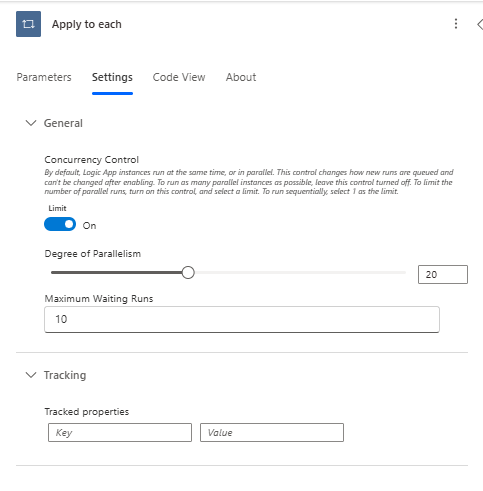

You can limit parallel processing by turning on Concurrency Control and setting a Degree of Parallelism (number of items processed at the same time). This can be done in:

- The Apply to each loop

- The trigger settings (if needed)
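
To make "Degree of Parallelism" concrete, here is a minimal Python sketch of the same idea outside Power Automate: a worker pool that never handles more than a fixed number of items at once. It is an analogy, not how Power Automate is implemented, and the names `process_all` and `fake_backend_call` are hypothetical.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def process_all(items, degree_of_parallelism, handler):
    """Run handler on every item, at most degree_of_parallelism at a time."""
    with ThreadPoolExecutor(max_workers=degree_of_parallelism) as pool:
        # pool.map keeps results in the same order as the input items
        return list(pool.map(handler, items))


# Bookkeeping used only to observe how many calls overlap in this demo
_lock = threading.Lock()
_active = 0
peak_active = 0


def fake_backend_call(item):
    """Stand-in for a write to SharePoint, SQL, etc. (hypothetical)."""
    global _active, peak_active
    with _lock:
        _active += 1
        peak_active = max(peak_active, _active)
    time.sleep(0.01)  # simulate network latency
    with _lock:
        _active -= 1
    return item * 2


results = process_all(range(20), degree_of_parallelism=3, handler=fake_backend_call)
print("peak simultaneous calls:", peak_active)
```

With the degree set to 3, the backend never sees more than three calls in flight, which is the effect the Concurrency Control setting has on an Apply to each loop.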

2. Where do I configure concurrency settings?

For Apply to each:

- Click the three dots (...) in the top-right corner of the Apply to each loop.

- Select Settings.

- Enable Concurrency Control.

- Set a safe value (e.g., 1–10) depending on your backend system’s limits.

For the trigger (optional):

If your flow is triggered very frequently (e.g., on item created/modified), you can also limit how many flow runs can happen at once:

- Click on the trigger (e.g., When an item is created or modified).

- Click Settings.

- Turn on Concurrency Control and adjust the degree accordingly.

3. What’s a safe concurrency value for thousands of records?

There’s no one-size-fits-all number, but in general:

- Start with 1–5 for sensitive systems (like SharePoint or SQL).

- If the target system can handle load, you can go up to 10 or 20, but always test it.

- Avoid values near 50 (the maximum for Apply to each) unless you’re sure your backend can scale.

- Lower values = more stability, but longer execution times.

4. Will reducing concurrency slow down the flow?

Yes. The flow processes fewer items at the same time, so the total duration may increase. However, the flow becomes:

- More stable

- Less likely to fail or time out

- Better at respecting service limits

It’s a trade-off between performance and reliability.
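
The trade-off can be estimated with simple arithmetic. The sketch below assumes every item takes about the same time and that items run in full "waves" of the chosen degree; real runs vary with throttling and retries, so treat the numbers as rough. The function name and the 2-second figure are hypothetical.

```python
import math


def estimated_duration_seconds(item_count, degree_of_parallelism, seconds_per_item):
    """Rough total run time: number of waves times the time per item."""
    waves = math.ceil(item_count / degree_of_parallelism)
    return waves * seconds_per_item


# 5,000 items at an assumed 2 seconds each
for degree in (1, 5, 20):
    print(degree, estimated_duration_seconds(5000, degree, 2))
# 1  -> 10000 seconds
# 5  -> 2000 seconds
# 20 -> 500 seconds
```

Going from 1 to 5 cuts the estimate by 8,000 seconds, while going from 5 to 20 saves only another 1,500, so small values already recover most of the speed.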

5. Microsoft Best Practices for Bulk Data Processing

- Use Pagination when pulling large data sets (e.g., List rows/Get items).

- Leave Concurrency Control off (Apply to each then processes one item at a time) or keep the degree low if your flow writes to data sources like SharePoint, SQL, or Excel.

- For large operations, consider chunking your data manually (e.g., using filters or batches).

- If using Dataverse or SQL, consider Stored Procedures or custom connectors for better performance.
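
As an illustration of manual chunking, this short Python sketch splits a large list into fixed-size batches. In a flow you would get a similar effect with filters or skip/take style expressions; the helper name `chunk` and the batch size of 100 are assumptions for the example.

```python
def chunk(items, size):
    """Yield consecutive batches of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


records = list(range(1050))  # stand-in for rows pulled from a data source
batches = list(chunk(records, 100))
print(len(batches), len(batches[-1]))  # 11 batches, the last one holds 50 records
```

Each batch can then be handled by its own run or loop, which keeps any single run short and makes a failed batch easier to retry.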
